A recent challenge I faced was writing AWS service logs to CloudWatch when the service could not write those logs to CloudWatch natively and supported only S3 as a destination.

The service in question was RDS for Microsoft SQL Server. Whilst standard logs can be written to CloudWatch by RDS for Microsoft SQL Server, Audit Logs cannot.

I, therefore, needed to find a way to get the logs from S3 to CloudWatch – without duplicates.

This article describes one such approach to solving this problem.

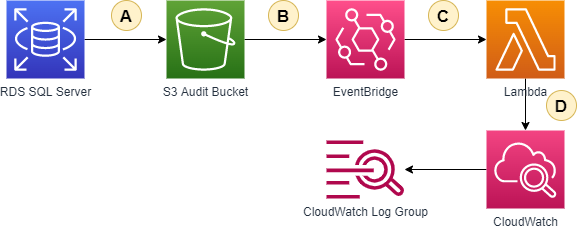

The AWS Services required are shown below:

The following describes what happens at each stage of this example solution.

A – SQL Server Exports Audit to S3

RDS SQL Server supports natively exporting Audit Logs to S3. These configuration options can be set when your database is created using custom option settings. More information on this can be found in AWS Documentation.

When an audit record is created, it is written to S3.

Note: Versioning must be enabled on the bucket to support the delta capability described in C.

B – S3 Triggers EventBridge

The next step is to create an EventBridge rule that matches events from the S3 Bucket. This rule looks for objects being created (note: in S3, the “Object Created” event also covers the update of an existing object, because overwriting an object is technically the same operation in AWS).
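The bucket-side setup implied so far (versioning from section A, plus delivery of S3 events to EventBridge) could be sketched roughly as follows. This is a minimal sketch, not the author's setup: `prepare_bucket` is a hypothetical helper name, and the client is passed in so that the function can be exercised without AWS credentials. It assumes a boto3 S3 client and uses its `put_bucket_versioning` and `put_bucket_notification_configuration` calls.

```python
def prepare_bucket(s3_client, bucket_name):
    """Enable versioning and EventBridge delivery on an S3 bucket.

    `s3_client` is assumed to be a boto3 S3 client,
    e.g. boto3.client("s3"). The function name is illustrative only.
    """
    # Versioning is needed so that a previous version exists to diff against.
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )
    # An empty EventBridgeConfiguration turns on delivery of the bucket's
    # events to EventBridge. Caution: this call replaces the bucket's whole
    # notification configuration, so merge in any existing settings first.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration={"EventBridgeConfiguration": {}},
    )
```

With boto3 installed and credentials configured, a call might look like `prepare_bucket(boto3.client("s3"), "smcm-delta-test")`.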

An example Event Pattern is shown below:

    {
      "source": ["aws.s3"],
      "detail-type": ["Object Created"],
      "detail": {
        "bucket": {
          "name": ["smcm-delta-test"]
        }
      }
    }

C – EventBridge calls Lambda

EventBridge then calls Lambda and provides the details of the file that has been updated.
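As an illustration of what "the details of the file" look like to the function, the sketch below pulls the bucket name, object key and version ID out of an S3 "Object Created" event as delivered by EventBridge. The names `S3ObjectRef` and `parse_object_created` are hypothetical and are not taken from the linked repository; the field names (`detail.bucket.name`, `detail.object.key`, `detail.object.version-id`) follow the S3 event structure for EventBridge.

```python
from typing import NamedTuple, Optional


class S3ObjectRef(NamedTuple):
    """The parts of the event the rest of the function needs."""
    bucket: str
    key: str
    version_id: Optional[str]


def parse_object_created(event: dict) -> S3ObjectRef:
    """Extract bucket, key and version ID from an EventBridge S3 event."""
    detail = event["detail"]
    obj = detail["object"]
    return S3ObjectRef(
        bucket=detail["bucket"]["name"],
        key=obj["key"],
        # "version-id" is only present when versioning is enabled on the bucket.
        version_id=obj.get("version-id"),
    )
```

A Lambda handler would typically call `parse_object_created(event)` as its first step and use the result to fetch the current and previous versions of the object.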

When the Lambda is triggered it does the following:

- If the file is new (i.e. no previous version), the entire file is prepped for logging in CloudWatch;

- If the file is a new version then Lambda reads the new version of the file and compares this file to the previous version of the file. The delta between the two files is then prepped for logging in CloudWatch

- All records found in the file, or in the delta, are then written to CloudWatch (using sequence tokens where relevant)

- Note: Lambda will create the Log Stream if it does not already exist.
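The comparison step in the list above can be sketched as a pure function. This is one possible way to compute the delta, not necessarily how the linked repository does it: it assumes the file is line-oriented text with one record per line, and `new_records` is a hypothetical name. Fetching the two versions from S3 (for example by listing the object's versions and reading each one by version ID) is left out so that the logic can be run on its own.

```python
from collections import Counter
from typing import List, Optional


def new_records(current_text: str, previous_text: Optional[str] = None) -> List[str]:
    """Return the lines of the current version that the previous one lacks.

    If there is no previous version, every non-blank line is returned.
    Duplicate lines are counted, so a record that appears twice in the new
    version but once in the old one is reported once.
    """
    current_lines = [line for line in current_text.splitlines() if line.strip()]
    if previous_text is None:
        return current_lines

    # How many times each line has already been logged from the old version.
    remaining = Counter(
        line for line in previous_text.splitlines() if line.strip()
    )
    delta = []
    for line in current_lines:
        if remaining[line] > 0:
            remaining[line] -= 1  # already seen in the previous version
        else:
            delta.append(line)    # genuinely new record
    return delta
```

Counting occurrences rather than using a plain set means that a legitimately repeated record is still logged, at the cost of holding both versions in memory.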

Why compare versions?

I wanted the Lambda to be generic enough to handle scenarios where the same file is updated multiple times (i.e. as additional records are added to the file). Without a delta approach, the same records would be logged multiple times in CloudWatch.

D – Lambda Writes to CloudWatch

Finally, every record is written to CloudWatch.

Additional context is added to the CloudWatch log entry by also specifying the file name and file version that contained the record(s). For example:

    {
      "record": "Mercedes",
      "file": "BucketTest.txt",
      "fileVersion": "bBrrUuwxWjnJPCseknf4VSDlFGNC1Nbr"
    }

In the above example, the “record” is the line in the file that was not in the last version of the file, or that exists in the newly created file.
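To make the log entry format concrete, here is a minimal sketch of building entries in that shape and sending them in batches. The helper names `build_log_events` and `send_in_batches` are hypothetical, and `logs_client` is assumed to be a boto3 CloudWatch Logs client. The sketch splits batches by event count only (`PutLogEvents` accepts at most 10,000 events per call) and does not enforce the per-call size limit. It also omits the sequence token, on the understanding that the current `PutLogEvents` documentation describes the token as ignored; treat that as an assumption to verify for your environment.

```python
import json
import time
from typing import List, Optional


def build_log_events(records: List[str], file_name: str, file_version: str,
                     timestamp_ms: Optional[int] = None) -> List[dict]:
    """Wrap each record in the JSON shape shown above, one log event each."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # CloudWatch expects epoch ms
    return [
        {
            "timestamp": timestamp_ms,
            "message": json.dumps(
                {"record": record, "file": file_name, "fileVersion": file_version}
            ),
        }
        for record in records
    ]


def send_in_batches(logs_client, log_group: str, log_stream: str,
                    events: List[dict], batch_size: int = 10_000) -> int:
    """Send events with put_log_events; returns the number of calls made.

    Assumes the log group and log stream already exist.
    """
    calls = 0
    for start in range(0, len(events), batch_size):
        logs_client.put_log_events(
            logGroupName=log_group,
            logStreamName=log_stream,
            logEvents=events[start:start + batch_size],
        )
        calls += 1
    return calls
```

For the example above, `build_log_events(["Mercedes"], "BucketTest.txt", "bBrrUuwxWjnJPCseknf4VSDlFGNC1Nbr")` produces a single event whose message is the JSON document shown.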

Source Code for Lambda Function

To help you, I have written example Python code that you can use in your own Lambda today. It is available at the link below.

Please feel free to raise PRs against this repo for any code improvements you wish to make!

https://github.com/mcmastercloud/s3DeltaCloudwatchLoaderLambda

Note: This article is an opinion and does not constitute professional advice – any actions taken by a reader based on this article are at the discretion of the reader, who is solely responsible for the outcome of those actions.